HomeRobot: Open-Vocabulary Mobile Manipulation

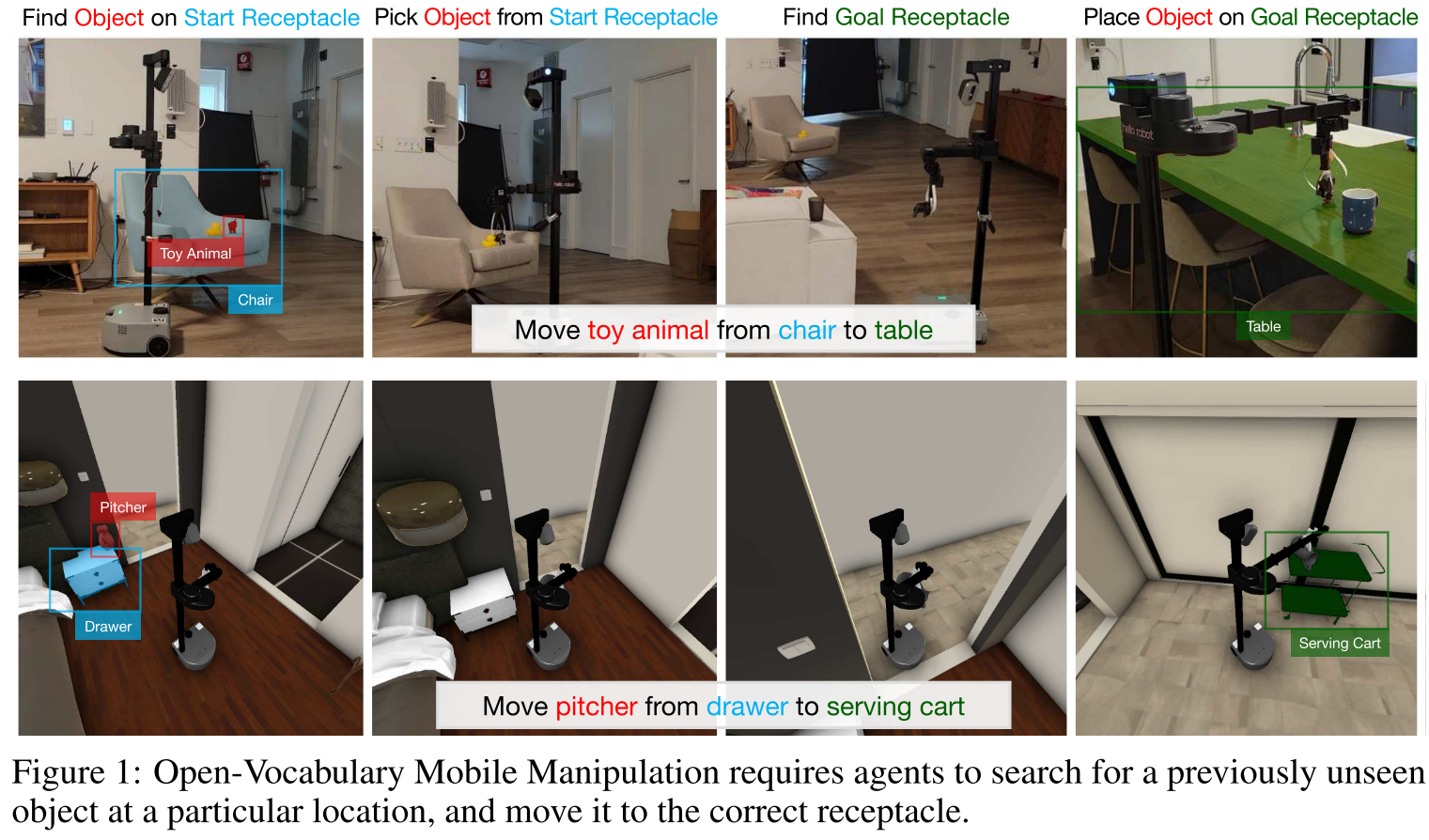

Open-Vocabulary Mobile Manipulation (OVMM) is the problem of picking any object in any unseen environment, and placing it in a commanded location.

OVMM involves perception, language understanding, navigation, and manipulation.

An agent navigates household environments to grasp novel objects and place them on target receptacles.

“Move (object) from the (start_receptacle) to the (goal_receptacle).”

The robot is initialized in an unseen home environment. Given the object, the start receptacle, and the goal receptacle, it must move the object by:

- finding the start_receptacle with the object

- picking up the object

- finding the goal_receptacle

- placing the object on the goal_receptacle.
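A minimal sketch of how the instruction template above could be split into its three slots. `OVMMGoal` and `parse_instruction` are hypothetical helpers, not part of HomeRobot. The sketch also assumes that instructions follow the quoted template wording exactly and that slot names never contain " from the " or " to the ".

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class OVMMGoal:
    """Hypothetical container for the three slots of an OVMM instruction."""
    object_name: str
    start_receptacle: str
    goal_receptacle: str


# Non-greedy groups so each slot stops at the next fixed phrase of the template;
# the trailing period is optional.
_TEMPLATE = re.compile(
    r"^Move (?P<obj>.+?) from the (?P<start>.+?) to the (?P<goal>.+?)\.?$"
)


def parse_instruction(text: str) -> OVMMGoal:
    """Split 'Move X from the Y to the Z.' into its object/start/goal slots."""
    match = _TEMPLATE.match(text.strip())
    if match is None:
        raise ValueError(f"not an OVMM instruction: {text!r}")
    return OVMMGoal(match.group("obj"), match.group("start"), match.group("goal"))


print(parse_instruction("Move stuffed toy from the chair to the table."))
# OVMMGoal(object_name='stuffed toy', start_receptacle='chair', goal_receptacle='table')
```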

Related Work

Open Vocabulary Navigation

[9] Open-vocabulary queryable scene representations for real world planning. arXiv, 2022.

[15] ConceptFusion: Open-set multimodal 3D mapping. arXiv, 2023.

[11] CLIP-Fields: Weakly supervised semantic fields for robotic memory. arXiv, 2022.

[12] USA-Net: Unified semantic and affordance representations for robot memory. arXiv, 2023.

[16] Beyond the nav-graph: Vision and language navigation in continuous environments. In European Conference on Computer Vision (ECCV), 2020.

Fully open-vocabulary scene representations: [11], [12], [15].

Related tasks include object-goal navigation [1, 2], skill learning [24], continual learning [61], and image instance navigation [25].

[24] Spatial-language attention policies for efficient robot learning. arXiv, 2023.

[25] Navigating to objects specified by images. arXiv, 2023.

[61] Evaluating continual learning on a home robot, 2023.

[1] Objectnav revisited: On evaluation of embodied agents navigating to objects. arXiv, 2020.

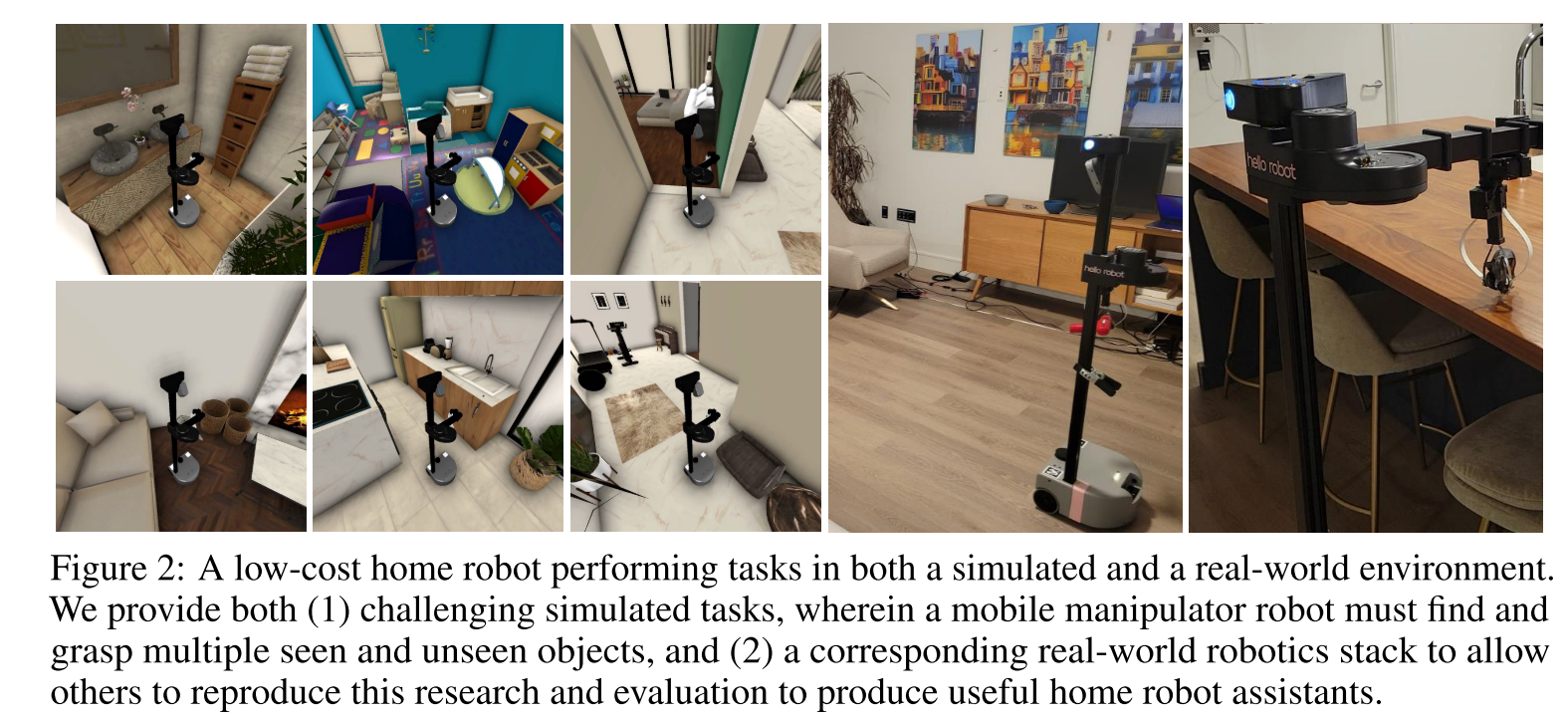

Simulation Data

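Some objects are unseen during training. All receptacles (the surfaces and containers that objects are placed on) are seen during training, but they are placed in different locations at test time.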

Habitat Synthetic Scenes Dataset (HSSD):

[19] Habitat Synthetic Scenes Dataset: An Analysis of 3D Scene Scale and Realism Tradeoffs for ObjectGoal Navigation. arXiv, 2023.

200+ scenes, 18K objects.

This work uses a subset of HSSD consisting of 60 scenes: 38 for training, 12 for validation, and 10 for testing.

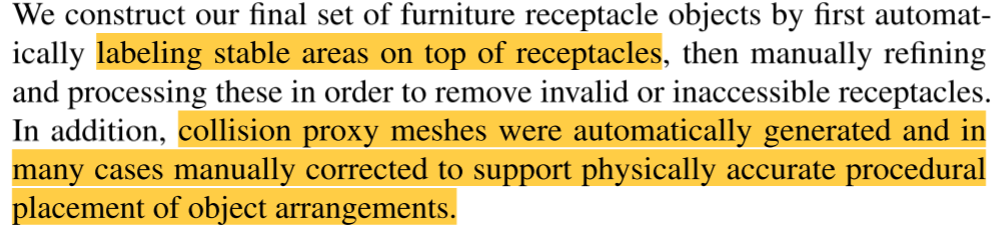

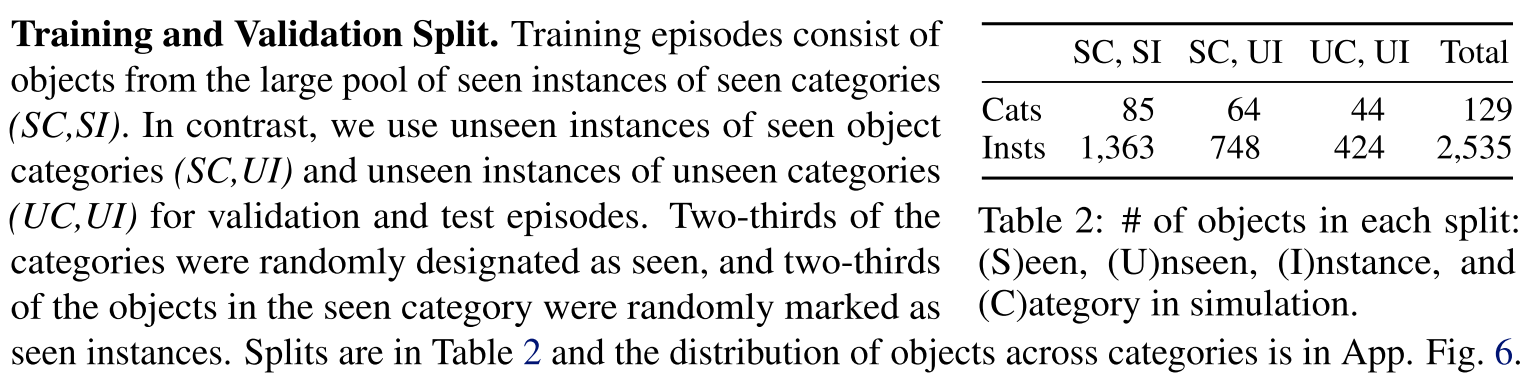

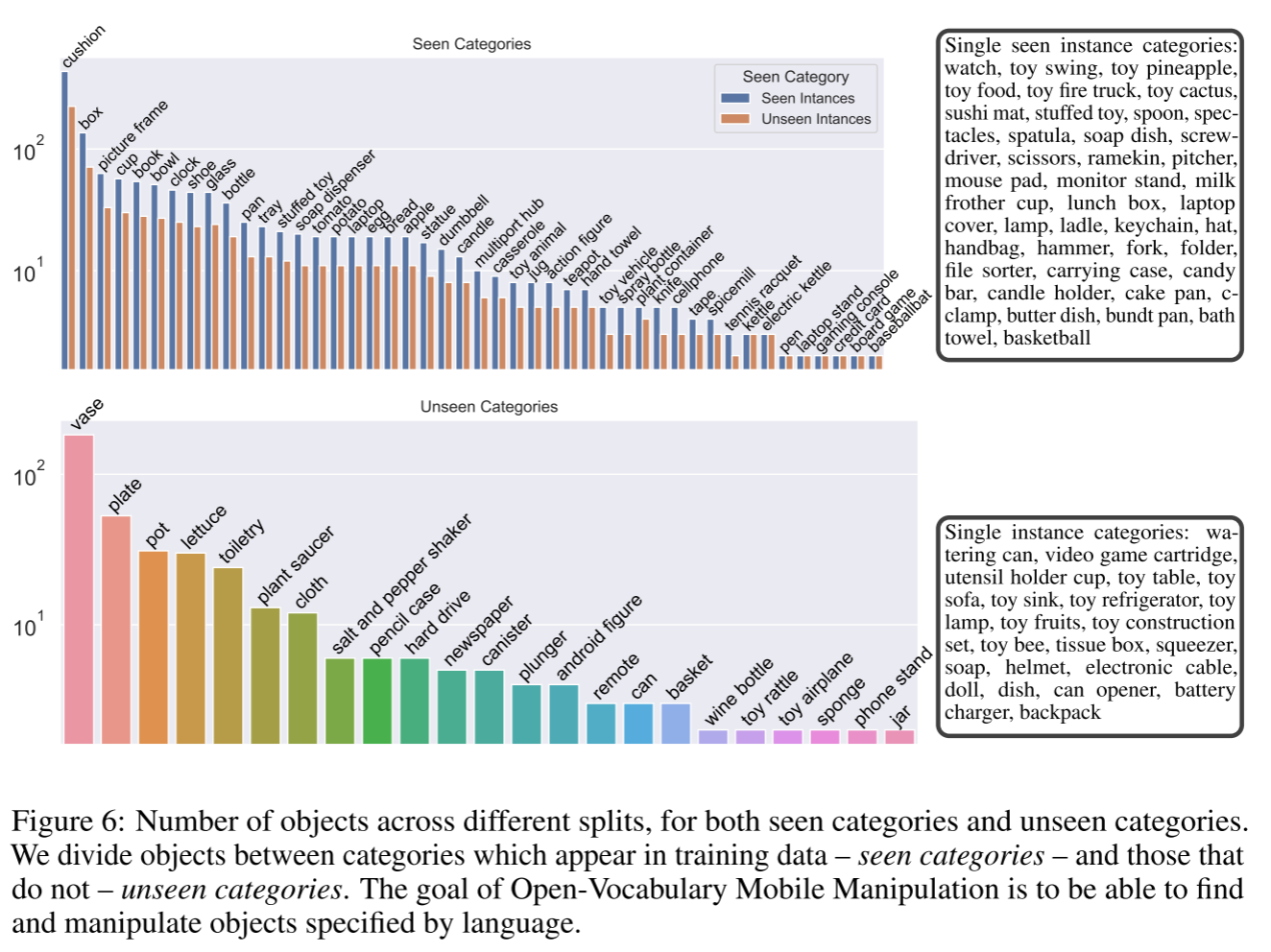

In total, 2,535 objects from 129 categories were annotated. Receptacles from 21 different categories that appear in HSSD were labeled and further annotated.

Episode Generation Details

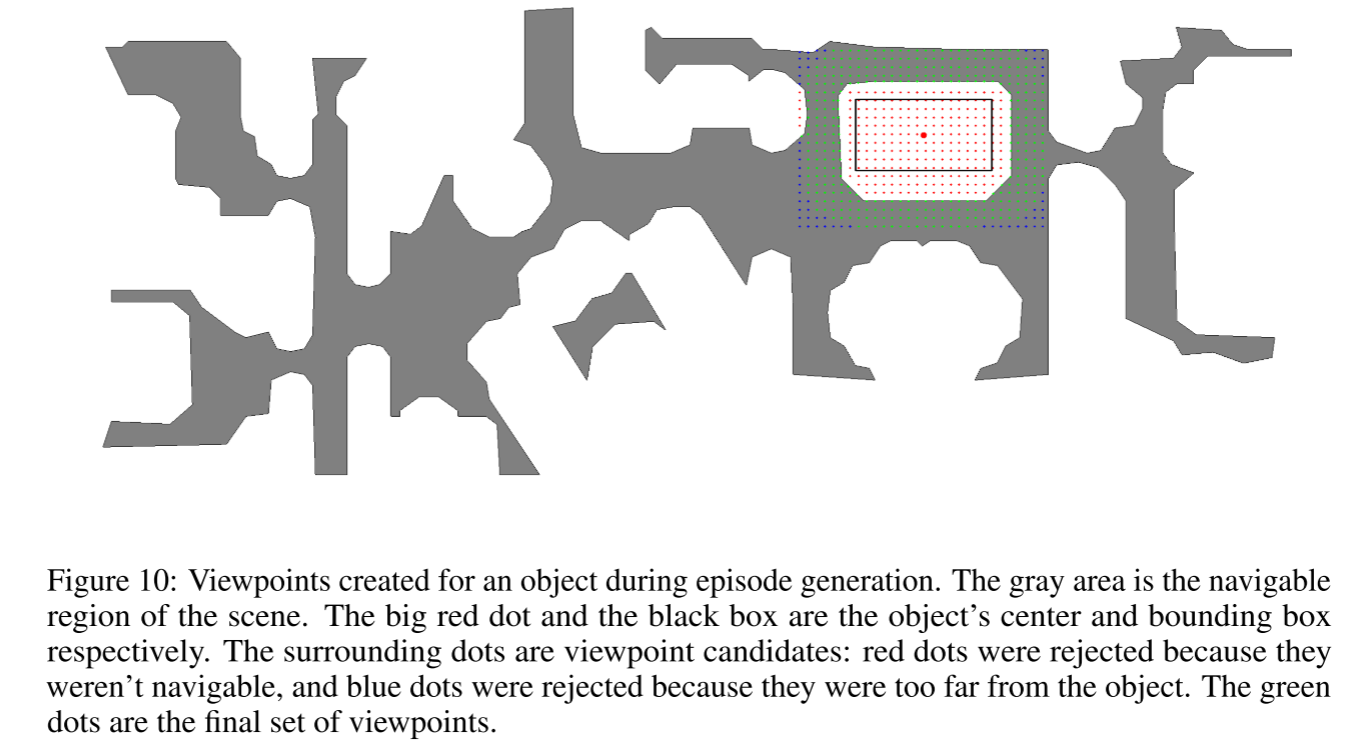

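A set of candidate viewpoints is generated. Each viewpoint is associated with a specific start_receptacle or goal_receptacle and represents a nearby position (less than 1.5 m away) from which the robot can see the receptacle.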

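Gray indicates the navigable area. The large red dot and the black box are the object's center and bounding box, respectively. The surrounding dots are candidate viewpoints: red dots are rejected because they are not navigable, and blue dots are rejected because they are too far from the object. Green dots form the final set of viewpoints.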
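The filtering in the figure can be sketched as follows. The sketch rests on several assumptions:

- Candidates lie on a regular 2D grid around the receptacle's axis-aligned bounding box.
- Navigability is abstracted as a hypothetical `is_navigable(point)` callback. In simulation this would be a navmesh query.
- The 1.5 m threshold is measured to the bounding box rather than to the center.
- The navigability test runs before the distance test.

The grid spacing and margin are placeholders. The line-of-sight check implied by "can see the receptacle" is not modeled.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def distance_to_box(p: Point, box_min: Point, box_max: Point) -> float:
    """Euclidean distance from a 2D point to an axis-aligned box (0 inside the box)."""
    dx = max(box_min[0] - p[0], 0.0, p[0] - box_max[0])
    dy = max(box_min[1] - p[1], 0.0, p[1] - box_max[1])
    return math.hypot(dx, dy)


def select_viewpoints(
    box_min: Point,
    box_max: Point,
    is_navigable: Callable[[Point], bool],
    max_dist: float = 1.5,  # viewpoints must lie within 1.5 m of the receptacle
    step: float = 0.25,     # grid spacing in meters (placeholder)
    margin: float = 2.0,    # how far beyond the box to sample (placeholder)
) -> Tuple[List[Point], List[Point], List[Point]]:
    """Return (accepted, not_navigable, too_far) candidate viewpoints."""
    nx = int(round((box_max[0] - box_min[0] + 2 * margin) / step)) + 1
    ny = int(round((box_max[1] - box_min[1] + 2 * margin) / step)) + 1
    accepted, not_navigable, too_far = [], [], []
    for i in range(nx):
        for j in range(ny):
            p = (box_min[0] - margin + i * step, box_min[1] - margin + j * step)
            if not is_navigable(p):
                not_navigable.append(p)  # red dots in the figure
            elif distance_to_box(p, box_min, box_max) > max_dist:
                too_far.append(p)        # blue dots
            else:
                accepted.append(p)       # green dots: final viewpoints
    return accepted, not_navigable, too_far
```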

- Object categories

Baseline Agent Implementation

OVMMAgent

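The skills required to complete the OVMM task are decomposed into:

- FindObj/FindRec: locate the object and the receptacle.

- Gaze: move close enough to the object to grasp it, and adjust the head orientation to get a good view of the object. Gazing is meant to improve the grasp success rate.

- Grasp: pick up the object. Only a high-level grasp action is provided.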

- Place: Move to a location in the environment and place the object on top of the goal_receptacle.
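The decomposition can be read as a fixed chain of skills. The sketch below assumes a simple sequencer that advances to the next skill whenever the current one reports success. `Skill`, `NEXT_SKILL`, and `run_episode` are hypothetical names, and `skill_succeeds` is a placeholder callback that stands in for the heuristic or RL skill policies.

```python
from enum import Enum, auto
from typing import Callable, List, Tuple


class Skill(Enum):
    FIND_OBJ = auto()  # find the start_receptacle and the object on it
    GAZE = auto()      # get close and look at the object
    GRASP = auto()     # high-level pick action
    FIND_REC = auto()  # find the goal_receptacle
    PLACE = auto()     # put the object on the goal_receptacle
    DONE = auto()


# Fixed order in which the skills are chained.
NEXT_SKILL = {
    Skill.FIND_OBJ: Skill.GAZE,
    Skill.GAZE: Skill.GRASP,
    Skill.GRASP: Skill.FIND_REC,
    Skill.FIND_REC: Skill.PLACE,
    Skill.PLACE: Skill.DONE,
}


def run_episode(
    skill_succeeds: Callable[[Skill, int], bool], max_steps: int = 100
) -> Tuple[Skill, List[Skill]]:
    """Step the active skill until the chain finishes or the step budget runs out.

    Returns the final skill (DONE on success) and the skill active at each timestep.
    """
    skill = Skill.FIND_OBJ
    trace: List[Skill] = []
    for t in range(max_steps):
        if skill is Skill.DONE:
            break
        trace.append(skill)
        if skill_succeeds(skill, t):
            skill = NEXT_SKILL[skill]
    return skill, trace
```

Counting how often each skill appears in `trace` gives a per-stage timestep breakdown. The skill the episode stops in shows where it failed.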

Heuristic baseline

Uses a well-known motion planning technique [2] and simple rules to execute grasping and manipulation actions.

[2] Navigating to objects in the real world. arXiv, 2022.

Reinforcement learning baseline

Learns exploration and manipulation skills using an off-the-shelf policy learning algorithm, DD-PPO.

While RGB is available in our simulation, our baseline policies do not use it directly; instead, they rely on segmentation predicted by Detic at test time.

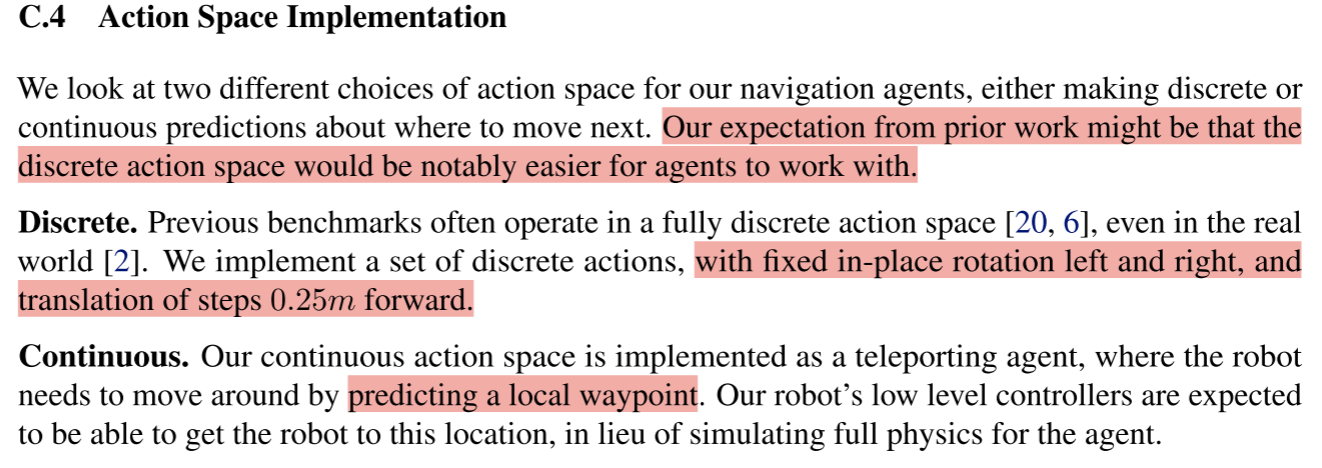

Action Space Implementation

In simulation, base motion is checked against the navmesh. We use the navmesh to determine whether the robot would collide with any object if moved toward the new location, and if so, we move it to the closest valid location instead.
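A minimal sketch of that check. It assumes the navmesh query is abstracted as a hypothetical `is_navigable(point)` callback, and it reads "closest valid location" as the last collision-free sample on the straight line toward the commanded position. It does not use Habitat-Sim's own navmesh API, and the sampling resolution is a placeholder.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float]


def move_with_collision_check(
    is_navigable: Callable[[Point], bool],
    start: Point,
    target: Point,
    resolution: float = 0.05,  # sampling step along the motion, in meters (placeholder)
) -> Point:
    """Move from `start` toward `target`, stopping at the last collision-free sample.

    If the whole segment is free the robot reaches `target`; otherwise it ends at the
    valid position nearest the collision along the straight-line motion.
    """
    n = max(1, math.ceil(math.dist(start, target) / resolution))
    last_valid = start
    for i in range(1, n + 1):
        t = i / n
        p = (start[0] + t * (target[0] - start[0]), start[1] + t * (target[1] - start[1]))
        if not is_navigable(p):
            break  # this sample would be in collision: keep the previous one
        last_valid = p
    return last_valid
```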

HomeRobot Implementation Details

The open-source HomeRobot library contains three different repositories:

- home_robot: Shared components such as Environment interfaces, controllers, detection and segmentation modules.

- home_robot_sim: Simulation stack with Environments based on Habitat.

- home_robot_hw: Hardware stack with server processes that run on the robot, a client API that runs on the GPU workstation, and Environments built using the client API.

Pose Information

We get the global robot pose from Hector SLAM [99] on the Hello Robot Stretch [22], which is used when creating 2d semantic maps for our model-based navigation policies.
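To place observations into the 2D semantic map, a point in the robot frame can be transformed with the global pose and then discretized into a grid cell. The sketch makes three assumptions: a planar pose `(x, y, theta)` in meters and radians, a grid whose rows index y and whose columns index x, and a hypothetical origin and resolution. It does not call any Hector SLAM or Stretch API.

```python
import math
from typing import Tuple


def robot_to_world(
    pose: Tuple[float, float, float], point: Tuple[float, float]
) -> Tuple[float, float]:
    """Transform a 2D point from the robot base frame into the world/map frame.

    pose = (x, y, theta): base position in meters and heading in radians,
    e.g. as estimated by a 2D SLAM system.
    """
    x, y, theta = pose
    px, py = point
    c, s = math.cos(theta), math.sin(theta)
    return (x + c * px - s * py, y + s * px + c * py)


def world_to_cell(
    p: Tuple[float, float], origin: Tuple[float, float] = (0.0, 0.0), resolution: float = 0.05
) -> Tuple[int, int]:
    """Discretize a world point into (row, col) of a top-down grid (rows index y)."""
    col = math.floor((p[0] - origin[0]) / resolution)
    row = math.floor((p[1] - origin[1]) / resolution)
    return row, col
```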

Low-Level Control for Navigation

Heuristic Grasping Policy

A heuristic policy is used.

Heuristic Placement Policy

Navigation Planning

- Semantic Mapping Module: same as in the 2020 paper.

A heuristic exploration strategy is used.
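A simplified sketch of a top-down semantic map, followed by frontier extraction. The sketch assumes:

- Labeled points are already in the world frame, for example depth pixels back-projected with the pose and tagged with Detic classes.
- Obstacles are points within a height band.
- Exploration is frontier-based.

The notes only say that the strategy is heuristic, so frontier exploration is one common choice, not necessarily the one used. All names, the resolution, and the height band are placeholders.

```python
from typing import List, Sequence, Tuple

Grid = List[List[int]]


def build_semantic_map(
    points: Sequence[Tuple[float, float, float, int]],
    num_classes: int,
    size: int,
    resolution: float = 0.05,                       # meters per cell (placeholder)
    height_band: Tuple[float, float] = (0.1, 1.5),  # obstacle heights in meters (placeholder)
) -> Tuple[Grid, Grid, List[Grid]]:
    """Project labeled world points (x, y, z, class_id) into top-down grids.

    class_id is -1 for points without a detected category. Returns the obstacle
    grid, the explored grid, and one grid per semantic class.
    """
    def empty() -> Grid:
        return [[0] * size for _ in range(size)]

    obstacle, explored = empty(), empty()
    semantic = [empty() for _ in range(num_classes)]
    for x, y, z, cls in points:
        row, col = int(y // resolution), int(x // resolution)
        if not (0 <= row < size and 0 <= col < size):
            continue  # outside the mapped area
        explored[row][col] = 1
        if height_band[0] <= z <= height_band[1]:
            obstacle[row][col] = 1
        if cls >= 0:
            semantic[cls][row][col] = 1
    return obstacle, explored, semantic


def frontier_cells(obstacle: Grid, explored: Grid) -> List[Tuple[int, int]]:
    """Explored, obstacle-free cells with at least one unexplored 4-neighbour."""
    size = len(explored)
    frontiers = []
    for r in range(size):
        for c in range(size):
            if not explored[r][c] or obstacle[r][c]:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < size and 0 <= cc < size and not explored[rr][cc]:
                    frontiers.append((r, c))
                    break
    return frontiers
```

An explorer of this kind would navigate to the nearest frontier until a cell of the goal category appears in the matching semantic grid.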

- Navigation Planner: uses the Fast Marching Method (FMM).

- Because small target objects may not be visible from far away, two rules are added on top of the planning policy of active neural mapping:
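The Fast Marching Method computes a distance-to-goal field over free space, and the planner then moves downhill on that field. The sketch below swaps FMM for 8-connected Dijkstra, which gives a similar but grid-biased geodesic distance. It then takes greedy downhill steps to choose the next waypoint. The grid and all function names are hypothetical. Diagonal moves may cut obstacle corners, which a real planner would guard against.

```python
import heapq
import math
from typing import List, Sequence, Tuple

Cell = Tuple[int, int]
NEIGHBOURS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def distance_field(free: Sequence[Sequence[bool]], goals: Sequence[Cell]) -> List[List[float]]:
    """Distance (in cells) from each free cell to the nearest goal cell.

    Uses 8-connected Dijkstra as a stand-in for the Fast Marching Method;
    blocked or unreachable cells stay at infinity.
    """
    rows, cols = len(free), len(free[0])
    dist = [[math.inf] * cols for _ in range(rows)]
    heap = []
    for r, c in goals:
        if free[r][c]:
            dist[r][c] = 0.0
            heap.append((0.0, r, c))
    heapq.heapify(heap)
    while heap:
        d, r, c = heapq.heappop(heap)
        if d > dist[r][c]:
            continue  # stale heap entry
        for dr, dc in NEIGHBOURS:
            rr, cc = r + dr, c + dc
            if 0 <= rr < rows and 0 <= cc < cols and free[rr][cc]:
                nd = d + math.hypot(dr, dc)
                if nd < dist[rr][cc]:
                    dist[rr][cc] = nd
                    heapq.heappush(heap, (nd, rr, cc))
    return dist


def next_waypoint(dist: List[List[float]], cell: Cell) -> Cell:
    """Greedy downhill step: the neighbouring cell with the smallest distance-to-goal."""
    r, c = cell
    best = cell
    for dr, dc in NEIGHBOURS:
        rr, cc = r + dr, c + dc
        inside = 0 <= rr < len(dist) and 0 <= cc < len(dist[0])
        if inside and dist[rr][cc] < dist[best[0]][best[1]]:
            best = (rr, cc)
    return best
```

Calling `next_waypoint` repeatedly from the robot's cell yields a cell-by-cell path. The path carries no heading, so base orientation has to be handled separately.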

Navigation Limitations

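- Open-vocabulary object categories make grasping and collision avoidance harder.

- The trajectory output by the Fast Marching Method is discrete and does not take orientation into account.

- Moving obstacles cannot be handled.

- The robot is modeled as a cylinder.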
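Treating the robot as a cylinder means it can be planned for as a single point once every obstacle is grown by the robot's radius. Below is a minimal sketch of that inflation on a binary occupancy grid. The radius and resolution are placeholders, and whether HomeRobot inflates its map in exactly this way is an assumption.

```python
import math
from typing import List

Grid = List[List[int]]


def inflate_obstacles(obstacle: Grid, radius_m: float, resolution: float) -> Grid:
    """Dilate a binary obstacle grid by a disk of the robot's radius.

    After inflation, any cell that is still free can hold the center of a
    cylindrical robot without the robot's footprint touching an obstacle.
    """
    rows, cols = len(obstacle), len(obstacle[0])
    k = int(math.ceil(radius_m / resolution))  # radius in cells
    offsets = [
        (dr, dc)
        for dr in range(-k, k + 1)
        for dc in range(-k, k + 1)
        if dr * dr + dc * dc <= k * k  # disk-shaped footprint
    ]
    inflated = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if obstacle[r][c]:
                for dr, dc in offsets:
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        inflated[rr][cc] = 1
    return inflated
```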

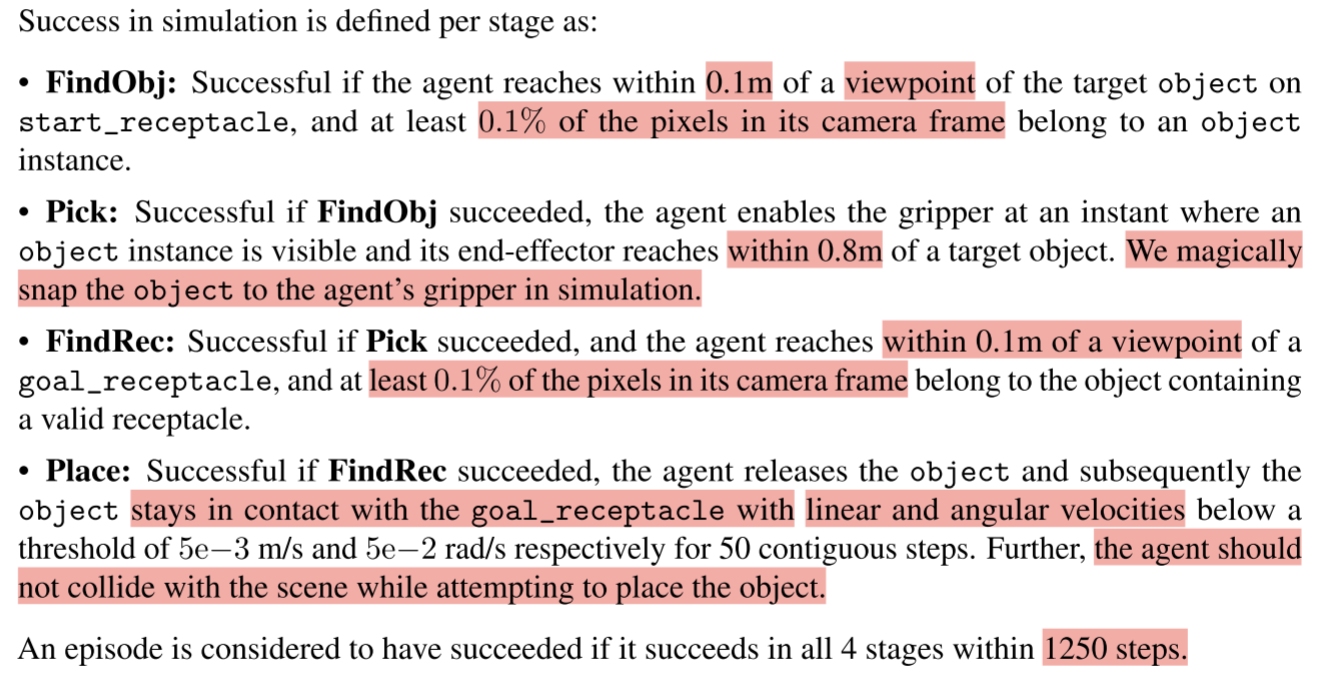

Metrics

Simulation Success Metrics

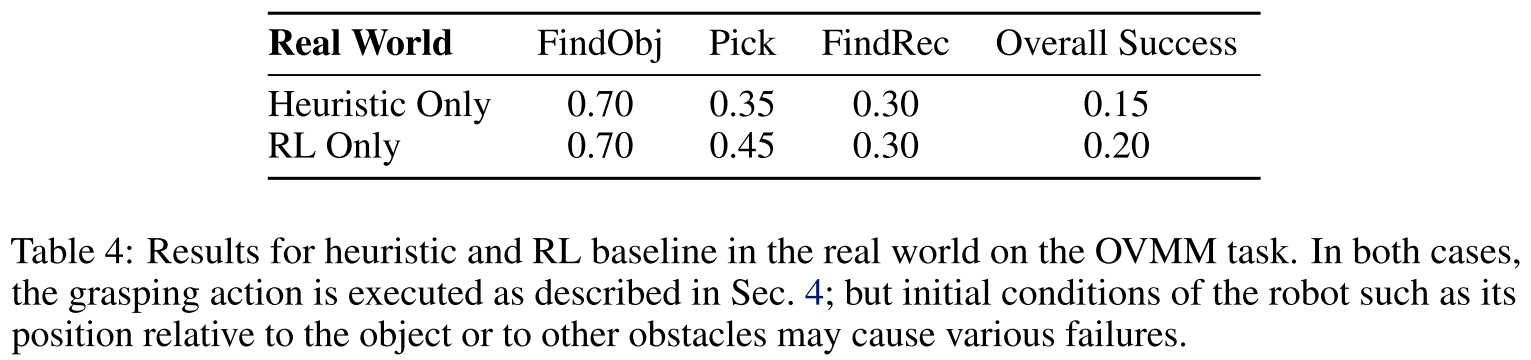

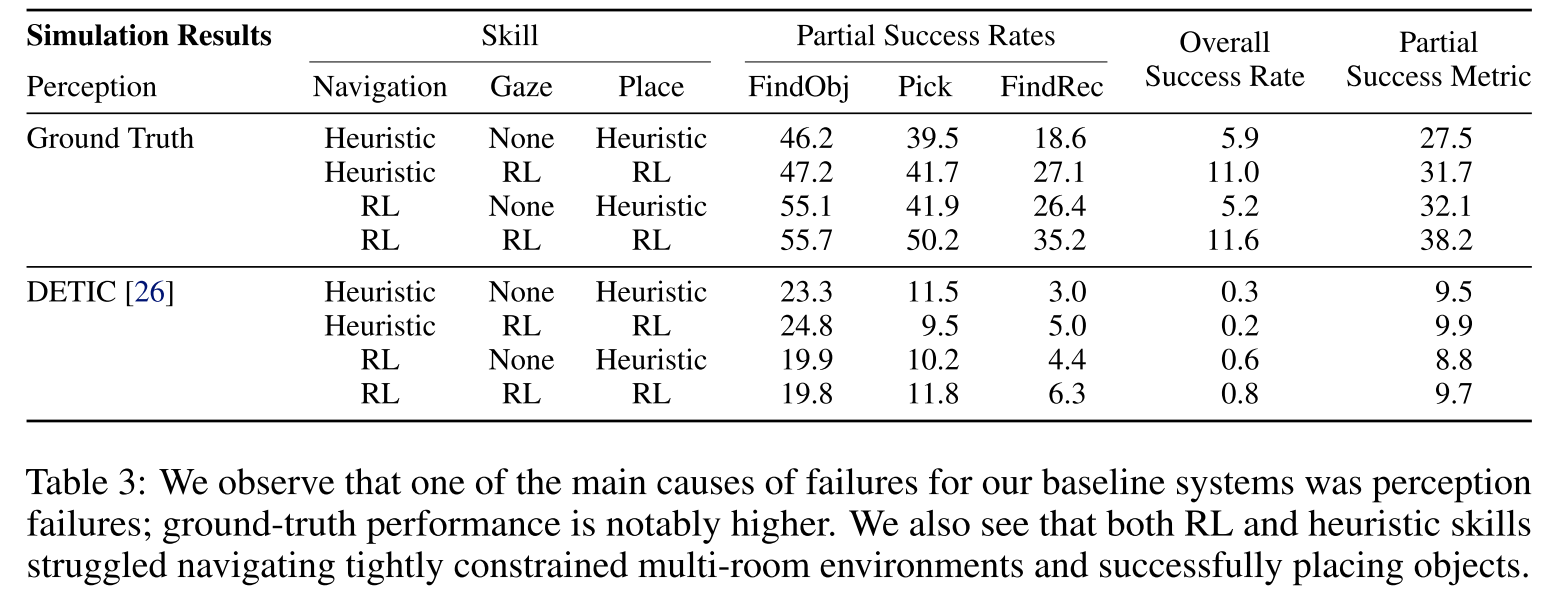

Results

Failure analysis

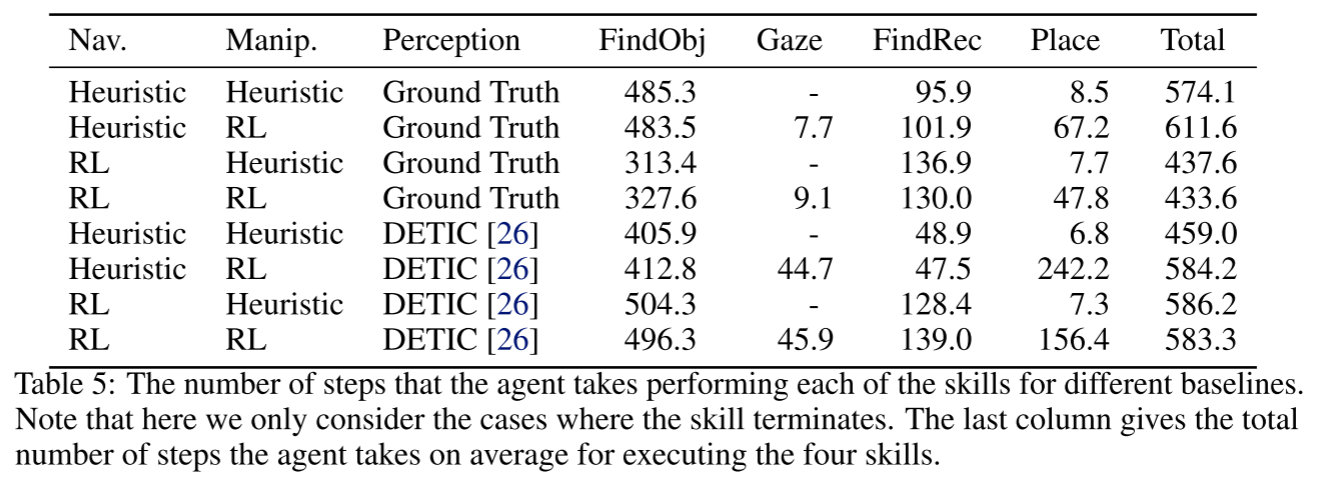

Number of timesteps spent in each stage

Limitations

- Grasping is not physically simulated.

- We consider full natural language queries out of scope.

- We do not implement many motion planners in HomeRobot, nor task-and-motion planning with replanning, although both would be ideal.